1. Introduction and Goals

5 minutes to read

| Jump directly to the github repository |

1.1. Create awesome docs!

docToolchain is an implementation of the docs-as-code approach for software architecture plus some additional automation. The basis of docToolchain is the philosophy that software documentation should be treated in the same way as code together with the arc42 template for software architecture.

How it all began…

1.1.1. docs-as-code

Before this project started, I wasn’t aware of the term docs-as-code. I just got tired of keeping all my architecture diagrams up to date by copying them from my UML tool over to my word processor.

As a lazy developer, I told myself ‘there has to be a better way of doing this’. And I started to automate the diagram export and switched from a full fledged word processor over to a markup renderer. This enabled me to reference the diagrams from within my text and update them just before I render the document.

1.1.2. arc42

Since my goal was to document software architectures, I was already using arc42, a template for software architecture documentation. At that time, I used the MS Word template.

But what is arc42?

Dr. Gernot Starke and Peter Hruschka created this template in a joint effort to create a standard for software architecture documents. They dumped all their experience about software architectures not only into a structure but also explaining texts. These explanations are part of every chapter of the template and give you guidance on how to write each chapter of the document.

arc42 is available in many formats like MS Word, textile, and Confluence. All these formats are automatically generated from one golden master formatted in AsciiDoc.

1.1.3. docToolchain

In order to follow the docs-as-code approach, you need a build script that automates steps like exporting diagrams and rendering the used Markdown (AsciiDoc in case of docToolchain) to the target format.

Unfortunately, such a build script is not easy to create in the first place (‘how do I create .docx?’, ‘why does lib x not work with lib y?’) and it is also not too easy to maintain.

docToolchain is the result of my journey through the docs-as-code land. The goal is to have an easy to use build script that only has to be configured and not modified and that is maintained by a community as open source software.

The technical steps of my journey are written down in my blog: https://rdmueller.github.io.

Let’s start with what you’ll get when you use docToolchain…

1.2. Benefits of the docs-as-code Approach

You want to write technical docs for your software project. So it is likely you already have the tools and processes to work with source code in place. Why not also use it for your docs?

1.2.1. Document Management System

By using a version control system like Git, you get a perfect document management system for free. It lets you version your docs, branch them and gives you an audit trail. You are even able to check who wrote which part of the docs. Isn’t that great?

Since your docs are now just plain text, it is also easy to do a diff and see exactly what has changed.

And when you store your docs in the same repository as your code, you always have both in sync!

1.2.2. Collaboration and Review Process

Git as a distributed version control system even enables collaboration on your docs. People can fork the docs and send you pull requests for the changes they made. By reviewing the pull request, you have a perfect review process out of the box - by accepting the pull request, you show that you’ve reviewed and accepted the changes. Most Git frontends like Bitbucket, GitLab and of course GitHub also allow you to reject pull requests with comments.

1.2.3. Image References and Code Snippets

Instead of pasting images to a binary document format, you now can reference images. This will ensure that those images are always up to date every time you rebuild your documents.

In addition, you can reference code snippets directly from your source code. This way, these snippets are also always up to date!

1.2.4. Compound and Stakeholder-Tailored Docs

Since you can not only reference images and code snippets but also sub-documents, you can split your docs into several sub-documents and a master, which brings all those docs together. But you are not restricted to one master — you can create master docs for different stakeholders that only contain the chapters needed for them.

1.2.5. Many more Features…

If you can dream it, you can script it.

-

Want to include a list of open issues from Jira? ✓ Check.

-

Want to include a changelog from Git? ✓ Check.

-

Want to use inline, text based diagrams? ✓ Check.

-

and many more…

2. How to install docToolchain

4 minutes to read

2.1. Get the tool

To start with docToolchain you need to get a copy of the current docToolchain repository.

The easiest way is to clone the repository without history and remove the .git folder:

git clone --recursive https://github.com/docToolchain/docToolchain.git <docToolchain home>

cd <docToolchain home>

rm -rf .git

rm -rf resources/asciidoctor-reveal.js/.git

rm -rf resources/reveal.js/.git--recursive option is required because the repository contains 2 submodules - resources/asciidoctor-reveal.js and resources/reveal.js.

Another way is to download the zipped git repository and rename it:

wget https://github.com/docToolchain/docToolchain/archive/master.zip

unzip master.zip

# fetching dependencies

cd docToolchain-master/resources

rm -d reveal.js

wget https://github.com/hakimel/reveal.js/archive/tags/3.9.2.zip -O reveal.js.zip

unzip reveal.js.zip

mv reveal.js-tags-3.9.2 reveal.js

rm -d asciidoctor-reveal.js

wget https://github.com/asciidoctor/asciidoctor-reveal.js/archive/v2.0.1.zip -O asciidoctor-reveal.js.zip

unzip asciidoctor-reveal.js.zip

mv asciidoctor-reveal.js-2.0.1 asciidoctor-reveal.js

mv docToolchain-master <docToolchain home>If you work (like me) on a Windows environment, just download and unzip the repository as well as its dependencies: reveal.js and asciidoctor-reveal.js.

After unzipping, put the dependencies in resources folder, so that the structure is the same as on GitHub.

You can add <docToolchain home>/bin to your PATH or you can run doctoolchain with full path if you prefer.

2.2. Initialize directory for documents

The next step after getting docToolchain is to initialize a directory where your documents live. In docToolchain this directory is named "newDocDir" during initialization, or just "docDir" later on.

2.2.1. Existing documents

If you already have some existing documents in AsciiDoc format in your project, you need to put the configuration file there to inform docToolchain what and how to process.

You can do that manually by copying the contents of template_config directory.

You can also do that by running initExisting task.

cd <docToolchain home>

./gradlew -b init.gradle initExisting -PnewDocDir=<your directory>You need to open Config.groovy file and configure names of your files properly. You may also change the PDF schema file to your taste.

docToolchain by default uses src/docs as the directory of your documentation.

If you use a different directory you need to go to <docToolchain home> and set the inputPath property in gradle.properties to your/path.

|

2.2.2. arc42 from scratch

If you don’t have existing documents yet, or if you need a fresh start, you can get the arc42 template in AsciiDoc format.

You can do that by manually downloading from https://arc42.org/download.

You can also do that by running the

initArc42<language> task.

Currently supported languages are:

-

DE - German

-

EN - English

-

ES - Spanish

-

RU - Russian

cd <docToolchain home>

./gradlew -b init.gradle initArc42EN -PnewDocDir=<newDocDir>The Config.groovy file is then preconfigured to use the downloaded template.

| Blog-Post: Let’s add Content! |

2.3. Build

This should already be enough to start a first build.

By now, docToolchain should be installed as command line tool and the path to its bin folder should be on your path.

If you now switch to your freshly initialized <newDocDir>, you should be able to execute the following commands:

doctoolchain <docDir> generateHTML

doctoolchain <docDir> generatePDFdoctoolchain.bat <docDir> generateHTML

doctoolchain.bat <docDir> generatePDF<docDir> may be relative, e.g. ".", or absolute.

As a result, you will see the progress of your build together with some warnings which you can just ignore for the moment.

The first build generated some files within the <docDir>/build:

build

|-- html5

| |-- arc42-template.html

| `-- images

| |-- 05_building_blocks-EN.png

| |-- 08-Crosscutting-Concepts-Structure-EN.png

| `-- arc42-logo.png

`-- pdf

|-- arc42-template.pdf

`-- images

|-- 05_building_blocks-EN.png

|-- 08-Crosscutting-Concepts-Structure-EN.png

`-- arc42-logo.pngCongratulations! If you see the same folder structure, you just managed to render the standard arc42 template as html and pdf!

If you didn’t get the right output, please raise an issue on github

| Blog-Posts: Behind the great Firewall, Enterprise AsciiDoctor |

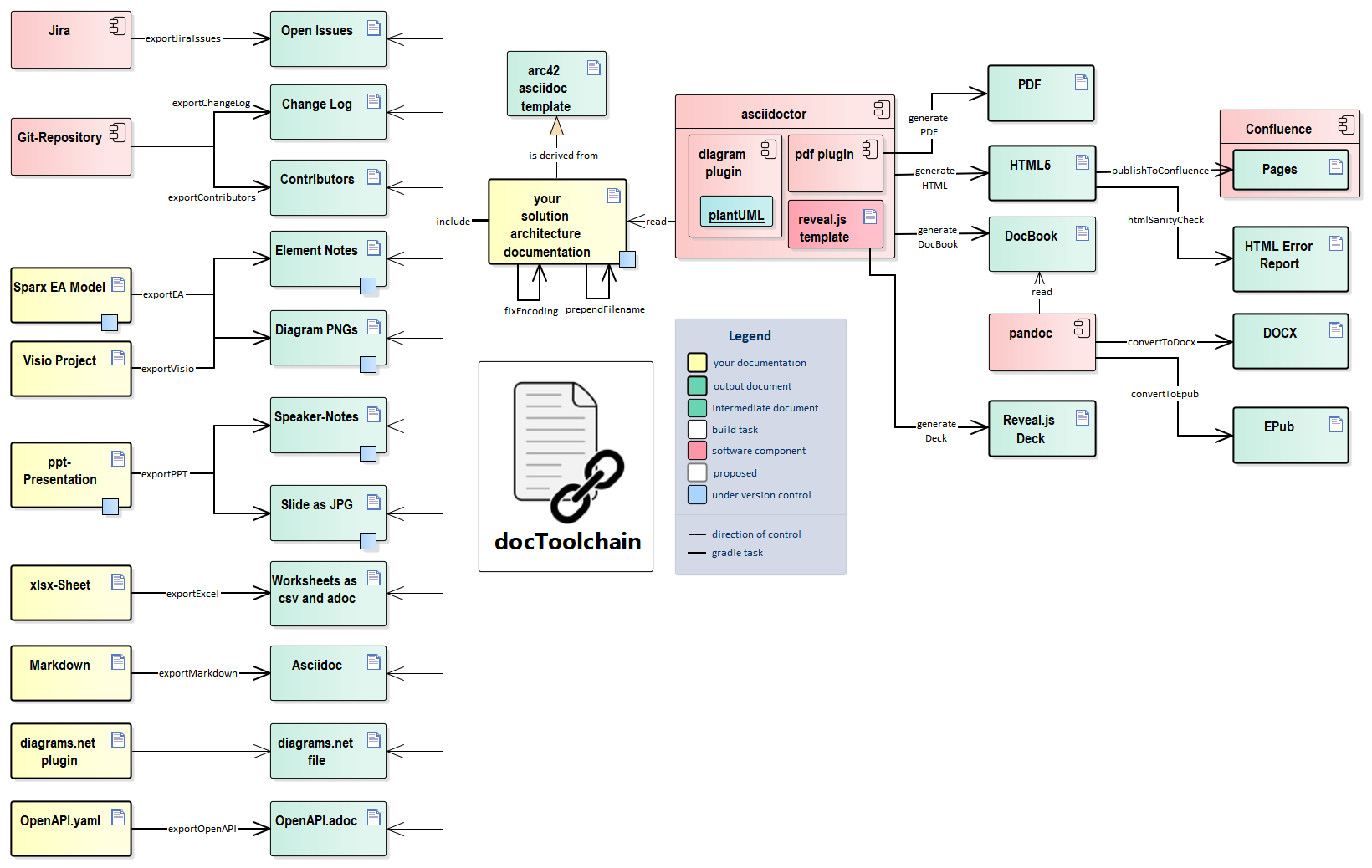

3. Overview of available Tasks

2 minutes to read

This chapter explains all docToolchain specific tasks.

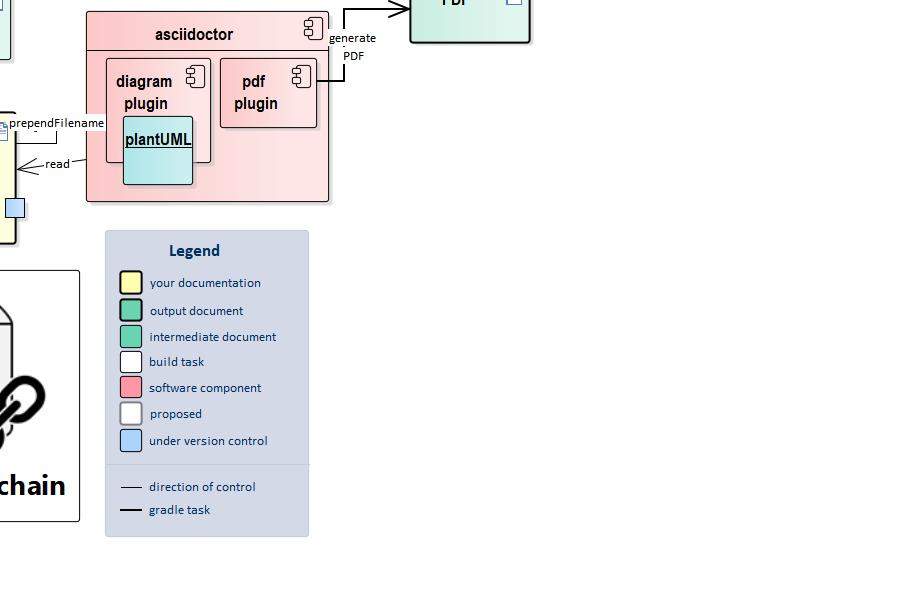

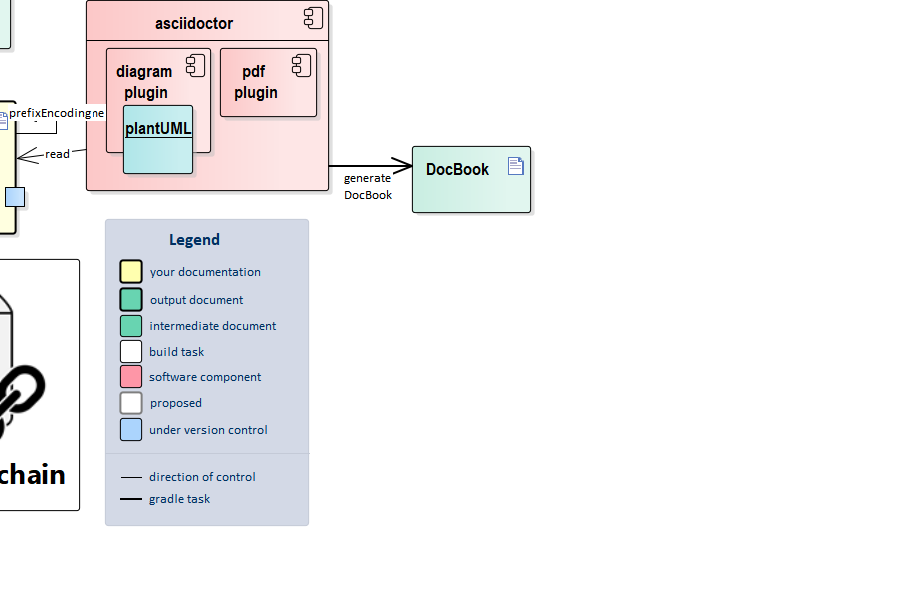

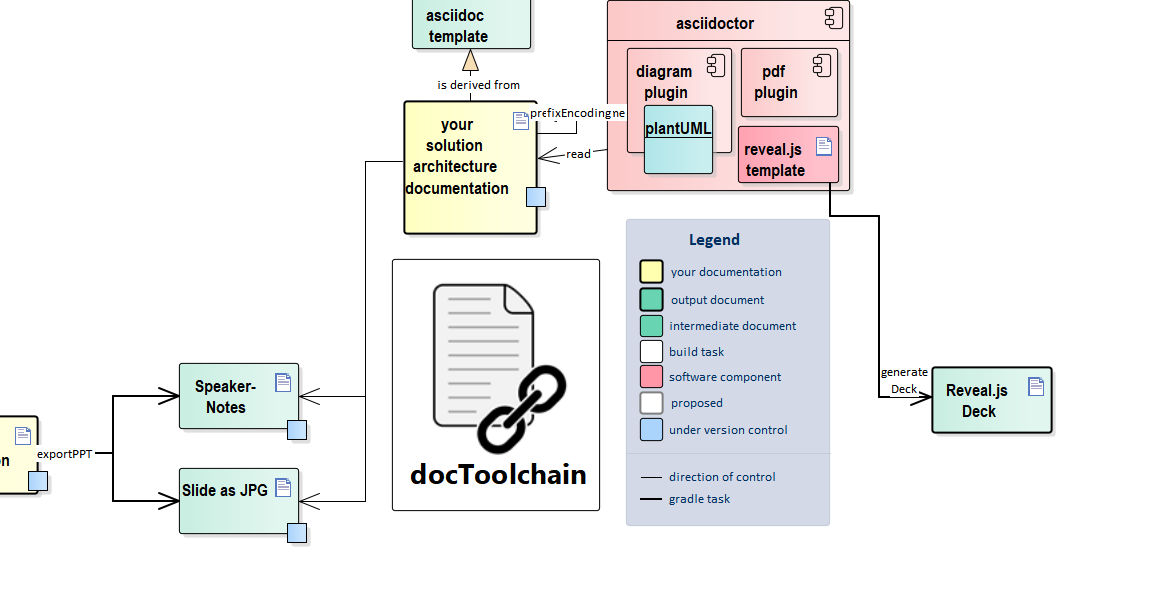

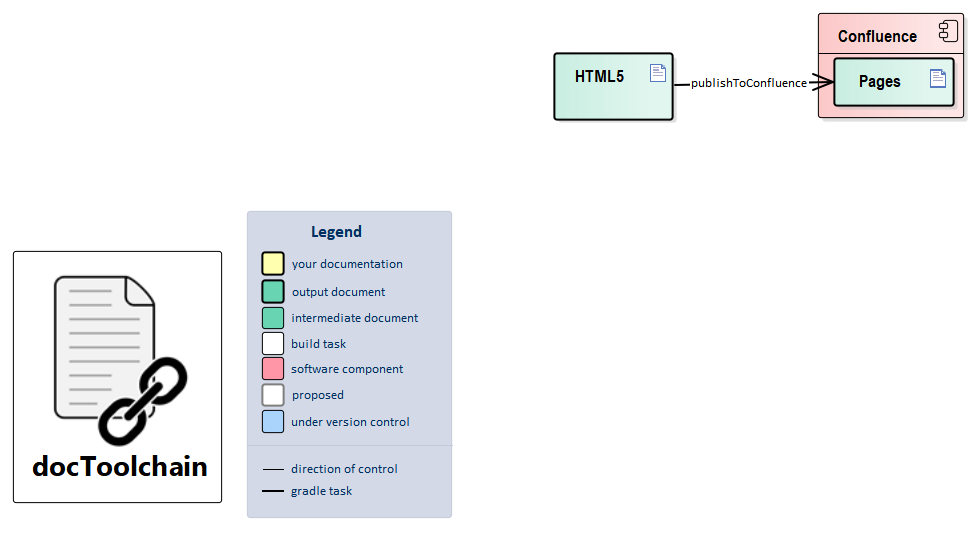

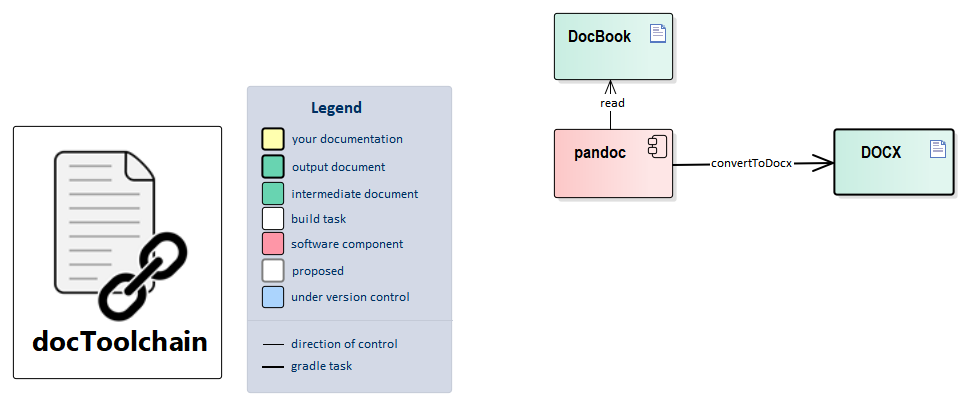

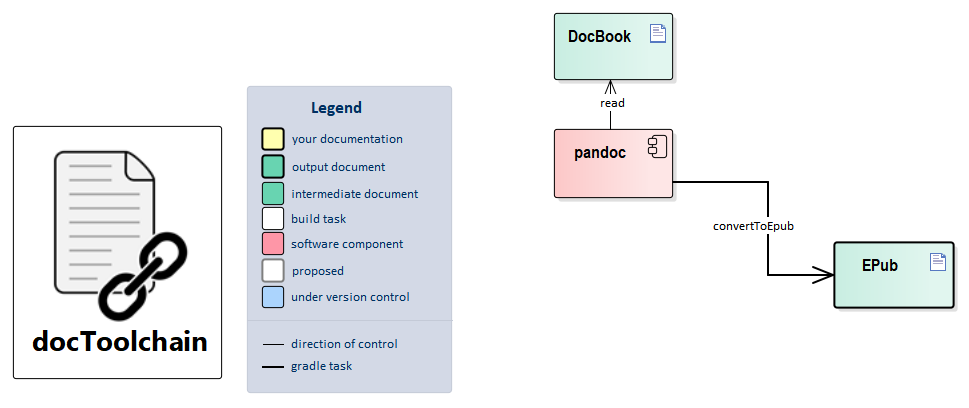

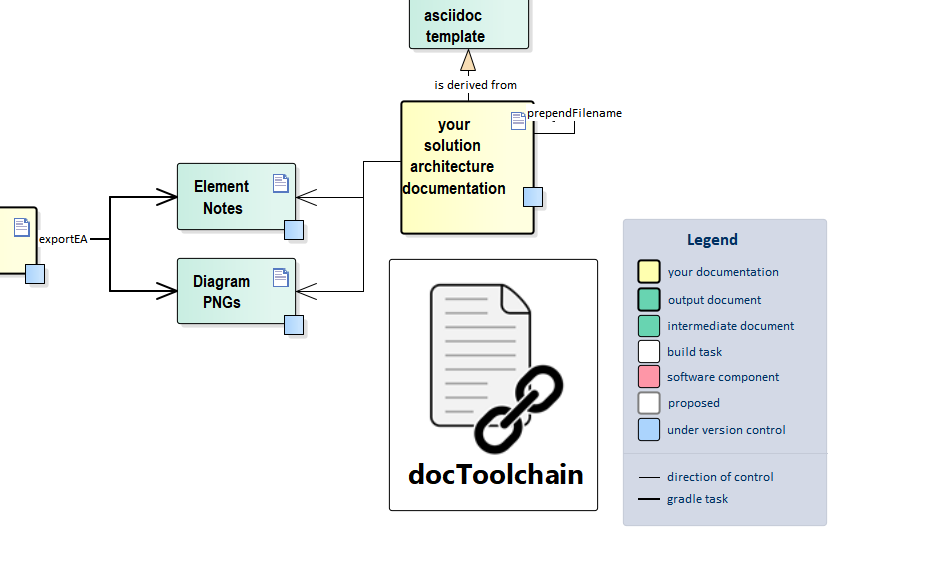

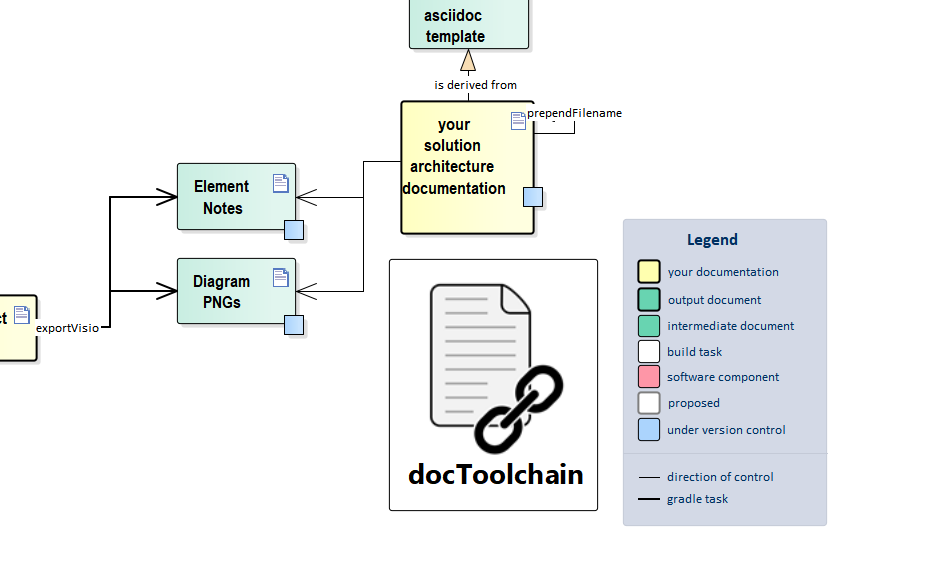

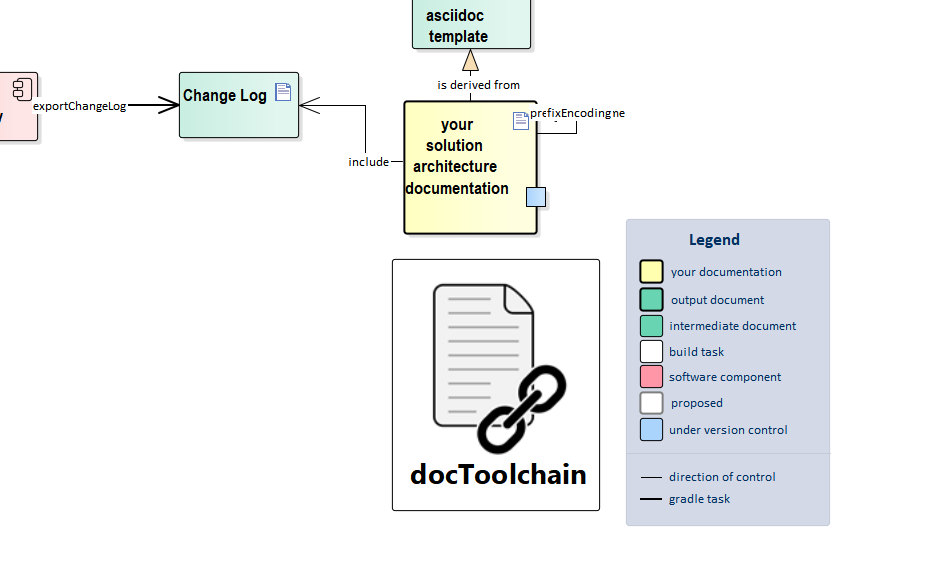

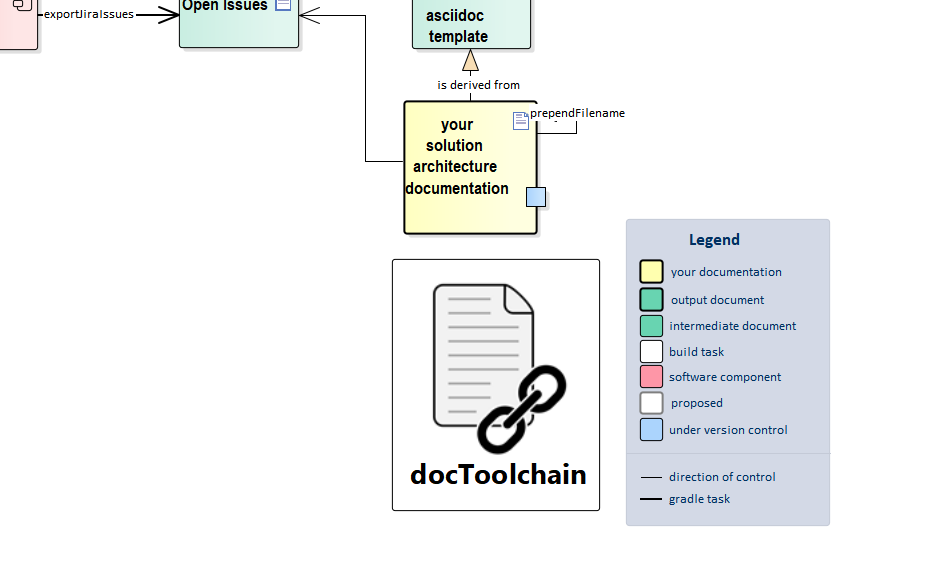

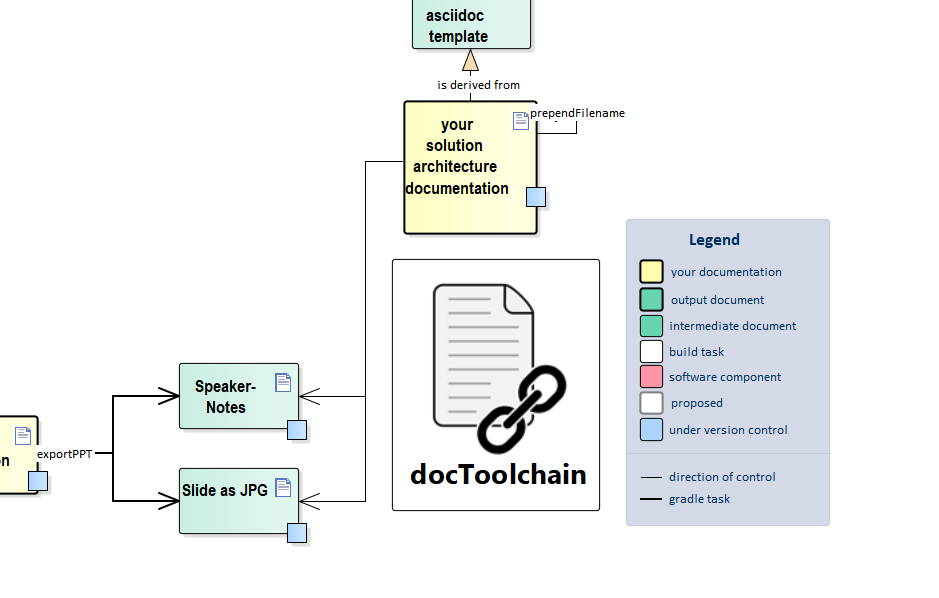

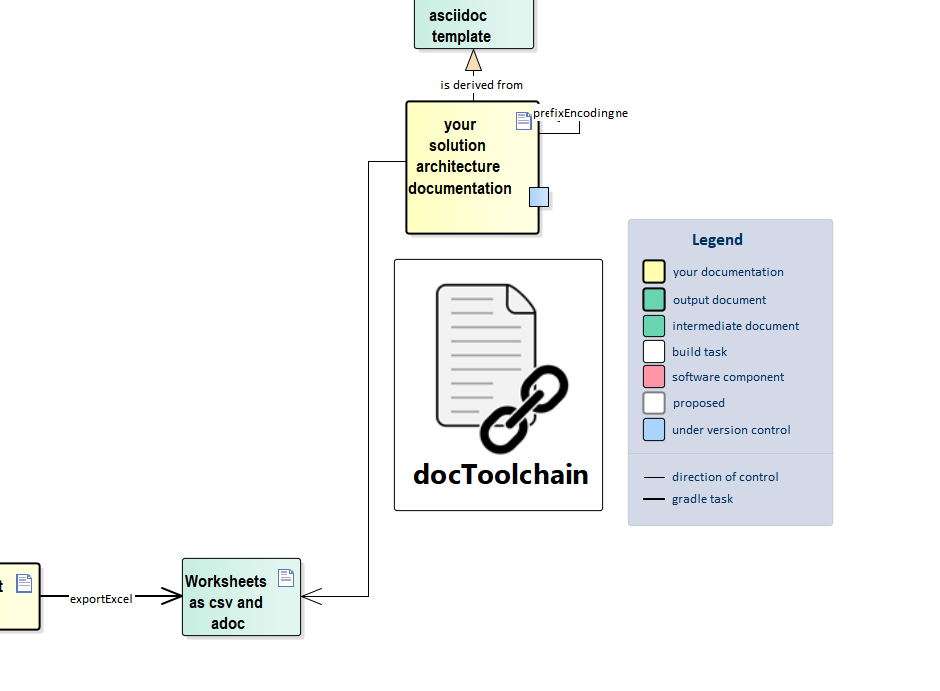

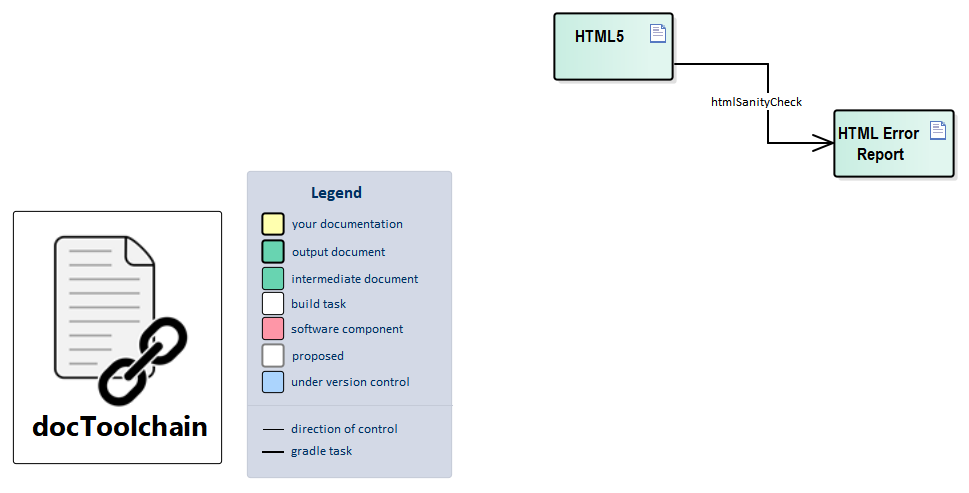

The following picture gives an overview of the whole build system:

3.1. Conventions

There are some simple naming conventions for the tasks. They might be confusing at first and that’s why they are explained here.

3.1.1. generateX

render would have been another good prefix, since these tasks use the plain AsciiDoctor functionality to render the source to a given format.

3.1.2. exportX

These tasks export images and AsciiDoc snippets from other systems or file formats. The resulting artifacts can then be included from your main sources.

What’s different from the generateX tasks is that you don’t need to export with each build.

It is also likely that you have to put the resulting artifacts under version control because the tools needed for the export (like Sparx Enterprise Architect or MS PowerPoint) are likely to be not available on a build server or on another contributor’s machine.

3.1.3. convertToX

These tasks take the output from AsciiDoctor and convert it (through other tools) to the target format. This results in a dependency on a generateX task and another external tool (currently pandoc).

3.1.4. publishToX

These tasks not only convert your documents but also deploy/publish/move them to a remote system — currently Confluence. This means that the result is likely to be visible immediately to others.

3.2. generateHTML

3 minutes to read

This is the standard AsciiDoctor generator which is supported out of the box.

The result is written to build/html5.

The HTML files need the images folder to be in the same directory to display correctly.

If you would like to have a single-file HTML as result, you can configure AsciiDoctor to store the images inline as data-uri.Just set :data-uri: in the config of your AsciiDoc file.But be warned - such a file can become very big easily and some browsers might get into trouble rendering them. https://rdmueller.github.io/single-file-html/ |

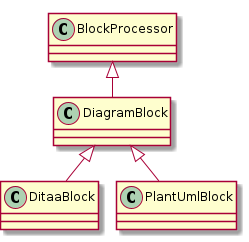

3.2.1. Text based Diagrams

For docToolchain, it is configured to use the asciidoctor-diagram plugin which is used to create PlantUML diagrams.

The plugin also supports a bunch of other text based diagrams, but PlantUML is the most used.

To use it, just specify your PlantUML code like this:

.example diagram

[plantuml, "{plantUMLDir}demoPlantUML", png] (1)

----

class BlockProcessor

class DiagramBlock

class DitaaBlock

class PlantUmlBlock

BlockProcessor <|-- DiagramBlock

DiagramBlock <|-- DitaaBlock

DiagramBlock <|-- PlantUmlBlock

----

| 1 | The element of this list specifies the diagram tool plantuml to be used.The second element is the name of the image to be created and the third specifies the image type. |

The {plantUMLDir} ensures that PlantUML also works for the generatePDF task.

Without it, generateHTML works fine, but the PDF will not contain the generated images.

|

| Make sure to specify a unique image name for each diagram, otherwise they will overwrite each other. |

The above example renders as

If you want to control the size of the generated diagram in the output, you can configure the "width" attribute (in pixels) or "scale" attribute (floating point ratio) passed to asciidoctor-diagram. For example, if you take the example diagram above and change the declaration to one of the below versions

[plantuml, target="{plantUMLDir}demoPlantUMLWidth", format=png, width=250]

# rest of the diagram definition

[plantuml, target="{plantUMLDir}demoPlantUMLScale", format=png, scale=0.75]

# rest of the diagram definition

it will render like this:

PlantUML needs Graphviz dot installed to work.

If you can’t install it, you can use the Java based version of the dot library.

Just add !pragma graphviz_dot smetana as the first line of your diagram definition.

This is still an experimental feature, but already works quite well!https://rdmueller.github.io/plantuml-without-graphviz/ |

3.2.2. Source

task generateHTML (

type: AsciidoctorTask,

group: 'docToolchain',

description: 'use html5 as asciidoc backend') {

attributes (

'plantUMLDir' : file("${docDir}/${config.outputPath}/html5").toURI().relativize(new File("${docDir}/${config.outputPath}/html5/plantUML/").toURI()).getPath()

)

onlyIf {

!sourceFiles.findAll {

'html' in it.formats

}.empty

}

// specify output folder explicitly to avoid cleaning targetDir from other generated content

outputDir = file(targetDir + '/html5/')

separateOutputDirs(false)

sources {

sourceFiles.findAll {

'html' in it.formats

}.each {

include it.file

println it.file

}

}

backends = ['html5']

}3.3. fixEncoding

1 minute to read

Whenever Asciidoctor has to process a file which is not UTF-8 encoded, the underlying Ruby tries to read it and throws an error like this:

asciidoctor: FAILED: /home/demo/test.adoc: Failed to load AsciiDoc document - invalid byte sequence in UTF-8

Unfortunately, it is hard to find the wrong encoded file if a lot of includes:: are used - Asciidoctor only shows the name of the main document.

| This is not a problem of Asciidoctor, but of the underlying ruby interpreter. |

This target crawls through all *.ad and *.adoc files and checks their encoding.

If it encounters a file which is not UTF-8 encoded, it will rewrite it with the UTF-8 encoding.

import groovy.util.*

import static groovy.io.FileType.*

task fixEncoding(

description: 'finds and converts non UTF-8 adoc files to UTF-8',

group: 'docToolchain helper',

) {

doLast {

File sourceFolder = new File("${docDir}/${inputPath}")

println("sourceFolder: " + sourceFolder.canonicalPath)

sourceFolder.traverse(type: FILES) { file ->

if (file.name ==~ '^.*(ad|adoc|asciidoc)$') {

CharsetToolkit toolkit = new CharsetToolkit(file);

// guess the encoding

def guessedCharset = toolkit.getCharset().toString().toUpperCase();

if (guessedCharset!='UTF-8') {

def text = file.text

file.write(text, "utf-8")

println(" converted ${file.name} from '${guessedCharset}' to 'UFT-8'")

}

}

}

}

}3.4. prependFilename

1 minute to read

When Asciidoctor renders a file, the file context only knows the name of the top-level AsciiDoc file but an include file doesn’t know that it is being included. It will simply get the name of the master file and has no chance to get his own names as attribute.

This task simply crawls through all AsciiDoc files and prepends the name of the current file like this:

:filename: manual/03_task_prependFilename.adoc

This way, each file can get its own file name. This enables features like the inclusion of file contributors (see exportContributors-task).

The task skips all files named config.*, _config.*, feedback.* and _feedback.*.

|

import static groovy.io.FileType.*

task prependFilename(

description: 'crawls through all AsciiDoc files and prepends the name of the current file',

group: 'docToolchain helper',

) {

doLast {

File sourceFolder = new File("${docDir}/${inputPath}")

println("sourceFolder: " + sourceFolder.canonicalPath)

sourceFolder.traverse(type: FILES) { file ->

if (file.name ==~ '^.*(ad|adoc|asciidoc)$') {

if (file.name.split('[.]')[0] in ["feedback", "_feedback", "config", "_config"]) {

println "skipped "+file.name

} else {

def text = file.getText('utf-8')

def name = file.canonicalPath - sourceFolder.canonicalPath

name = name.replace("\\", "/").replaceAll("^/", "")

if (text.contains(":filename:")) {

text = text.replaceAll(":filename:.*", ":filename: $name")

println "updated "+name

} else {

text = ":filename: $name\n" + text

println "added "+name

}

file.write(text,'utf-8')

}

}

}

}

}3.5. collectIncludes

2 minutes to read

This tasks crawls through your whole project looking for AsciiDoc files with a certain name pattern. It then creates an AsciiDoc file which just includes all files found.

When you create modular documentation, most includes are static. For example, the arc42-template has 12 chapters and a master template in which those 12 chapters are included.

But when you work with dynamic modules like ADRs - Architecture Decision Records - you create those files on the fly.

Maybe not even within your /src/docs folder but right beside the code file for which you wrote the ADR.

In order to include these files in your documentation, you now would have to add the file with its whole relative path to one of your AsciiDoc files.

This task will handle it for you!

Just stick with your files names to the pattern ^[A-Z]{3,}[-\_].\* (it begins with at last three upper case letters and a dash/underscore) and this task will collect this file and write it to your build folder.

You only have to include this generated file from within your documentation.

If you provide templates for the documents these templates are skipped if the name matches the pattern ^.*[-_][tT]emplate[-\_].*.

Example:

You have a file called

/src/java/yourCompany/domain/books/ADR-1-whyWeUseTheAISINInsteadOFISBN.adoc

The task will collect this file and write another file called

/build/docs/_includes/ADR_includes.adoc

Which will look like this:

include::../../../src/java/yourCompany/domain/books/ADR-1-whyWeUseTheAISINInsteadOFISBN.adoc[]

Obviously, you get the most benefit if you not only have one ADR file, but several ones which get collected. 😎

You then include these files in your main documentation by using a single include:

include::{targetDir}/docs/_includes/ADR_includes.adoc[]

import static groovy.io.FileType.*

import java.security.MessageDigest

task collectIncludes(

description: 'collect all ADRs as includes in one file',

group: 'docToolchain'

) {

doFirst {

new File(targetDir, '_includes').mkdirs()

}

doLast {

//let's search the whole project for files, not only the docs folder

//could be a problem with node projects :-)

//running as subproject? set scandir to main project

if (project.name!=rootProject.name && scanDir=='.') {

scanDir = project(':').projectDir.path

}

if (docDir.startsWith('.')) {

docDir = file(new File(projectDir, docDir).canonicalPath)

}

logger.info "docToolchain> docDir: ${docDir}"

logger.info "docToolchain> scanDir: ${scanDir}"

if (scanDir.startsWith('.')) {

scanDir = file(new File(docDir, scanDir).canonicalPath)

} else {

scanDir = file(new File(scanDir, "").canonicalPath)

}

logger.info "docToolchain> scanDir: ${scanDir}"

logger.info "docToolchain> includeRoot: ${includeRoot}"

if (includeRoot.startsWith('.')) {

includeRoot = file(new File(docDir, includeRoot).canonicalPath)

}

logger.info "docToolchain> includeRoot: ${includeRoot}"

File sourceFolder = scanDir

println "sourceFolder: " + sourceFolder.canonicalPath

def collections = [:]

sourceFolder.traverse(type: FILES) { file ->

if (file.name ==~ '^[A-Z]{3,}[-_].*[.](ad|adoc|asciidoc)$') {

def type = file.name.replaceAll('^([A-Z]{3,})[-_].*$','\$1')

if (!collections[type]) {

collections[type] = []

}

println "file: " + file.canonicalPath

def fileName = (file.canonicalPath - scanDir.canonicalPath)[1..-1]

if (file.name ==~ '^.*[Tt]emplate.*$') {

println "ignore template file: " + fileName

} else {

if (file.name ==~ '^.*[A-Z]{3,}_includes.adoc$') {

println "ignore generated _includes files: " + fileName

} else {

if ( fileName.startsWith('docToolchain') || fileName.replace("\\", "/").matches('^.*/docToolchain/.*$')) {

//ignore docToolchain as submodule

} else {

println "include corrected file: " + fileName

collections[type] << fileName

}

}

}

}

}

println "targetDir - docDir: " + (targetDir - docDir)

println "targetDir - includeRoot: " + (targetDir - includeRoot)

def pathDiff = '../' * ((targetDir - docDir)

.replaceAll('^/','')

.replaceAll('/$','')

.replaceAll("[^/]",'').size()+1)

println "pathDiff: " + pathDiff

collections.each { type, fileNames ->

if (fileNames) {

def outFile = new File(targetDir+'/_includes', type + '_includes.adoc')

println outFile.canonicalPath-sourceFolder.canonicalPath

outFile.write("// this is autogenerated\n")

fileNames.sort().each { fileName ->

outFile.append ("include::../"+pathDiff+fileName.replace("\\", "/")+"[]\n\n")

}

}

}

}

}3.6. generatePDF

2 minutes to read

This task makes use of the asciidoctor-pdf plugin to render your documents as a pretty PDF file.

The file will be written to build/pdf.

| The used plugin is still in alpha status, but the results are already quite good. If you want to use another way to create a PDF, use PhantomJS for instance and script it. |

The PDF is generated directly from your AsciiDoc sources without the need of an intermediate format or other tools. The result looks more like a nicely rendered book than a print-to-pdf HTML page.

It is very likely that you need to "theme" you PDF - change colors, fonts, page header, and footer.

This can be done by creating a custom-theme.yml file.

As a starting point, copy the file src/docs/pdfTheme/custom-theme.yml from docToolchain to your project and reference it from your main .adoc`file via setting the `:pdf-stylesdir:.

For instance, insert

:pdf-stylesdir: ../pdfTheme

at the top of your document to reference the custom-theme.yml from the /pdfTheme folder.

Documentation on how to modify a theme can be found in the asciidoctor-pdf theming guide.

| Blog-Post: Beyond HTML |

3.6.1. Source

task generatePDF (

type: AsciidoctorTask,

group: 'docToolchain',

description: 'use pdf as asciidoc backend') {

attributes (

'plantUMLDir' : file("${docDir}/${config.outputPath}/pdf/images/plantUML/").path

)

onlyIf {

!sourceFiles.findAll {

'pdf' in it.formats

}.empty

}

sources {

sourceFiles.findAll {

'pdf' in it.formats

}.each {

include it.file

}

}

backends = ['pdf']

}3.7. generateDocbook

1 minute to read

This is only a helper task - it generates the intermediate format for convertToDocx and convertToEpub.

3.7.1. Source

task generateDocbook (

type: AsciidoctorTask,

group: 'docToolchain',

description: 'use docbook as asciidoc backend') {

onlyIf {

!sourceFiles.findAll {

'docbook' in it.formats

}.empty

}

sources {

sourceFiles.findAll {

'docbook' in it.formats

}.each {

include it.file

}

}

backends = ['docbook']

}3.8. generateDeck

1 minute to read

This task makes use of the asciidoctor-reveal.js backend to render your documents into a HTML based presentation.

This task is best used together with the exportPPT task. It creates a PowerPoint presentation and enriches it with reveal.js slide definitions in AsciiDoc within the speaker notes.

3.8.1. Source

task generateDeck (

type: AsciidoctorTask,

group: 'docToolchain',

description: 'use revealJs as asciidoc backend to create a presentation') {

attributes (

'idprefix': 'slide-',

'idseparator': '-',

'docinfo1': '',

'revealjs_theme': 'black',

'revealjs_progress': 'true',

'revealjs_touch': 'true',

'revealjs_hideAddressBar': 'true',

'revealjs_transition': 'linear',

'revealjs_history': 'true',

'revealjs_slideNumber': 'true'

)

options template_dirs : [new File(new File (projectDir,'/resources/asciidoctor-reveal.js'),'templates').absolutePath ]

onlyIf {

!sourceFiles.findAll {

'revealjs' in it.formats

}.empty

}

sources {

sourceFiles.findAll {

'revealjs' in it.formats

}.each {

include it.file

}

}

outputDir = file(targetDir+'/decks/')

resources {

from('resources') {

include 'reveal.js/**'

}

from(sourceDir) {

include 'images/**'

}

into("")

logger.info "${docDir}/${config.outputPath}/images"

}

}3.9. publishToConfluence

7 minutes to read

This target takes the generated HTML file, splits it by headline and pushes it to Confluence. This enables you to use the docs-as-code approach while getting feedback from non-techies through Confluence comments. And it fulfills the "requirement" of "… but all documentation is in Confluence".

Special features:

-

[source]-blocks are converted to code-macro blocks in confluence. -

only pages and images which have changed between task runs are really published and hence only for those changes notifications are sent to watchers. This is quite important - otherwise watchers are easily annoyed by too many notifications.

-

:keywords:Keywords are attached as labels to every generated Confluence page. The rules for page labels should be kept in mind. See https://confluence.atlassian.com/doc/add-remove-and-search-for-labels-136419.html. Several keywords are allowed. They must be separated by comma, e.g.:keywords: label_1, label-2, label3, …. Labels (keywords) must not contain a space character! Use '_' or '-'.

| code-macro-blocks in confluence render an error if the language attribute contains an unknown language. See https://confluence.atlassian.com/doc/code-block-macro-139390.html for a list of valid language and how to add further languages. |

3.9.1. Configuration

We tried to make the configuration self-explaining, but there are always some note to add.

- ancestorId

-

this is the page ID of the parent page to which you want your docs to be published. Go to this page, click on edit and the needed ID will show up in the URL. Specify the ID as string within the config file.

- api

-

for cloud instances,

[context]iswiki - preambleTitle

-

the title of the page containing the preamble (everything the first second level heading). Default is 'arc42'

- disableToC

-

This boolean configuration define if the Table of Content (ToC) is disabled from the page once uploaded in confluence. (it is false by default, so the ToC is active)

- pagePrefix/pageSuffix

-

Confluence can’t handle two pages with the same name. Moreover, the script matches pages regardless of the case. It will thus refuse to replace a page whose title only differs in case with an existing page. So you should create a new confluence space for each piece of larger documentation. If you are restricted and can’t create new spaces, you can use this

pagePrefix/pageSuffixto define a prefix/suffix for this doc so that it doesn’t conflict with other page names. - credentials

-

Use username and password or even better username and api-token. You can create new API-tokens in your profile. To avoid having your password or api-token versioned through git, you can store it outside of this configuration as environment variable or in another file - the key here is that the config file is a groovy script. e.g. you can do things like

credentials = "user:${new File("/home/me/apitoken").text}".bytes.encodeBase64().toString()

To simplify the injection of credentials from external sources there is a fallback. Should you leave the credentials field empty,

the variables confluenceUser and confluencePassword from the build environment will be used for authentication. You can set these through any means

allowed by gradle like the gradle.properties file in the project or your home directory, environment variables or command-line flags.

For all ways to set these variables, have a look at the gradle manual.

- apikey

-

In cases where you have to use full user authorization because of internal confluence permission handling, you need to add the API-token in addition to the credentials. The API-token cannot be added to the credentials as it is used for user and password exchange. Therefore the API-token can be added as parameter apikey, which makes the addition of the token as a separate header field with key:

keyIdand value ofapikey. Example including storing of the real value outside this configuration:apikey = "${new File("/home/me/apitoken").text}". - extraPageContent

-

If you need to prefix your pages with a warning that this is generated content - this is the right place.

- enableAttachments

-

If value is set to true, your links to local file references will be uploaded as attachments. The current implementation only supports a single folder. This foldername will be used as a prefix to validate if your file should be uploaded or not. In case you enable this feature, and use a folder which starts with "attachment*", an adaption of this prefix is required.

docToolchain will crawl through all your generated <a href>-Tags and compare the defined attachmentPrefix with the start of the href-attribute.

If it matches, the referenced file will be uploaded as attachment.

So, for each document folder, which references attachments, you will need a folder called ${attachmentPrefix} to store your attachments.

As alternative, you can set attachmentPrefix to ./ and ensure that all your link: macros which reference attachments, start with ./.

In this case make sure that you don’t link to other pages starting with ./, otherwise, these will also be uploaded as attachments.

Example:

All files to attach will require to be linked inside the document.

link:attachement/myfolder/myfile.json[My API definition]

- attachmentPrefix

-

The expected foldername of your output dir. Default:

attachment - jiraServerId

-

the jira server id your confluence instance is connected to. If value is set, all anchors pointing to a jira ticket will be replaced by the confluence jira macro. To function properly jiraRoot has to be configured (see exportJiraIssues).

- proxy

-

If you need to provide a proxy to access Confluence, you may set a map with keys

host(e.g.'my.proxy.com'),port(e.g.'1234') andschema(e.g.'http') of your proxy. - useOpenapiMacro

-

If this option is present and equal to "confluence-open-api" then any source block marked with class openapi will be wrapped in Elitesoft Swagger Editor macro: (see Elitesoft Swagger Editor)

For backward compatibility: If this option is present and equal totrue, then again the Elitesoft Swagger

[source.openapi,yaml]

----

include::myopeanapi.yaml[]

----If this option is present and equal to "open-api" then any source block marked with class openapi will be wrapped in Open API Documentation for Confluence macro: (see Open API Documentation for Confluence). A download source (yaml) button is shown by default.

Using the plugin can be handled on different ways.

-

copy/paste the content of the YAML file to the plugin without linking to the origin source by using the url to the YAML file

[source.openapi,yaml]

----

\include::https://my-domain.com/path-to-yaml[]

-----

copy/paste the content of the YAML file to the plugin without linking to the origin source by using a YAML file in your project structure:

[source.openapi,yaml]

----

\include::my-yaml-file.yaml[]

-----

create a link between the plugin and the YAML file without copying the content into the plugin. The advantage following this way is that even in case the API specification is changed without re-generating the documentation, the new version of the configuration is used in Confluence.

[source.openapi,yaml,role="url:https://my-domain.com/path-to-yaml"]

----

\include::https://my-domain.com/path-to-yaml[]

----//Configureation for publishToConfluence

confluence = [:]

// 'input' is an array of files to upload to Confluence with the ability

// to configure a different parent page for each file.

//

// Attributes

// - 'file': absolute or relative path to the asciidoc generated html file to be exported

// - 'url': absolute URL to an asciidoc generated html file to be exported

// - 'ancestorName' (optional): the name of the parent page in Confluence as string;

// this attribute has priority over ancestorId, but if page with given name doesn't exist,

// ancestorId will be used as a fallback

// - 'ancestorId' (optional): the id of the parent page in Confluence as string; leave this empty

// if a new parent shall be created in the space

// - 'preambleTitle' (optional): the title of the page containing the preamble (everything

// before the first second level heading). Default is 'arc42'

//

// The following four keys can also be used in the global section below

// - 'spaceKey' (optional): page specific variable for the key of the confluence space to write to

// - 'createSubpages' (optional): page specific variable to determine whether ".sect2" sections shall be split from the current page into subpages

// - 'pagePrefix' (optional): page specific variable, the pagePrefix will be a prefix for the page title and it's sub-pages

// use this if you only have access to one confluence space but need to store several

// pages with the same title - a different pagePrefix will make them unique

// - 'pageSuffix' (optional): same usage as prefix but appended to the title and it's subpages

// only 'file' or 'url' is allowed. If both are given, 'url' is ignored

confluence.with {

input = [

[ file: "build/docs/html5/arc42-template-de.html" ],

]

// endpoint of the confluenceAPI (REST) to be used

// verfiy that you got the correct endpoint by browsing to

// https://[yourServer]/[context]/rest/api/user/current

// you should get a valid json which describes your current user

// a working example is https://arc42-template.atlassian.net/wiki/rest/api/user/current

api = 'https://[yourServer]/[context]/rest/api/'

// Additionally, spaceKey, createSubpages, pagePrefix and pageSuffix can be globally defined here. The assignment in the input array has precedence

// the key of the confluence space to write to

spaceKey = 'asciidoc'

// the title of the page containing the preamble (everything the first second level heading). Default is 'arc42'

preambleTitle = ''

// variable to determine whether ".sect2" sections shall be split from the current page into subpages

createSubpages = false

// the pagePrefix will be a prefix for each page title

// use this if you only have access to one confluence space but need to store several

// pages with the same title - a different pagePrefix will make them unique

pagePrefix = ''

pageSuffix = ''

/*

WARNING: It is strongly recommended to store credentials securely instead of commiting plain text values to your git repository!!!

Tool expects credentials that belong to an account which has the right permissions to to create and edit confluence pages in the given space.

Credentials can be used in a form of:

- passed parameters when calling script (-PconfluenceUser=myUsername -PconfluencePass=myPassword) which can be fetched as a secrets on CI/CD or

- gradle variables set through gradle properties (uses the 'confluenceUser' and 'confluencePass' keys)

Often, same credentials are used for Jira & Confluence, in which case it is recommended to pass CLI parameters for both entities as

-Pusername=myUser -Ppassword=myPassword

*/

//optional API-token to be added in case the credentials are needed for user and password exchange.

//apikey = "[API-token]"

// HTML Content that will be included with every page published

// directly after the TOC. If left empty no additional content will be

// added

// extraPageContent = '<ac:structured-macro ac:name="warning"><ac:parameter ac:name="title" /><ac:rich-text-body>This is a generated page, do not edit!</ac:rich-text-body></ac:structured-macro>

extraPageContent = ''

// enable or disable attachment uploads for local file references

enableAttachments = false

// default attachmentPrefix = attachment - All files to attach will require to be linked inside the document.

// attachmentPrefix = "attachment"

// Optional proxy configuration, only used to access Confluence

// schema supports http and https

// proxy = [host: 'my.proxy.com', port: 1234, schema: 'http']

// Optional: specify which Confluence OpenAPI Macro should be used to render OpenAPI definitions

// possible values: ["confluence-open-api", "open-api", true]. true is the same as "confluence-open-api" for backward compatibility

// useOpenapiMacro = "confluence-open-api"

}3.9.2. CSS Styling

Some AsciiDoctor features depend on particular CSS style definitions. Unless these styles are defined, some formatting that is present in the HTML version will not be represented when published to Confluence.

-

Log in to Confluence as a space admin

-

Go to the desired space

-

Select "Space tools", "Look and Feel", "Stylesheet"

-

Click "Edit", enter the desired style definitions, and click "Save"

The default style definitions can be found in the AsciiDoc project as asciidoctor-default.css. Note that you likely do NOT want to include the whole thing, as some of the definitions are likely to disrupt Confluence’s layout.

The following style definitions are believed to be Confluence-compatible, and enable use of the built-in roles (big/small, underline/overline/line-through, COLOR/COLOR-background for the sixteen HTML color names):

.big{font-size:larger}

.small{font-size:smaller}

.underline{text-decoration:underline}

.overline{text-decoration:overline}

.line-through{text-decoration:line-through}

.aqua{color:#00bfbf}

.aqua-background{background-color:#00fafa}

.black{color:#000}

.black-background{background-color:#000}

.blue{color:#0000bf}

.blue-background{background-color:#0000fa}

.fuchsia{color:#bf00bf}

.fuchsia-background{background-color:#fa00fa}

.gray{color:#606060}

.gray-background{background-color:#7d7d7d}

.green{color:#006000}

.green-background{background-color:#007d00}

.lime{color:#00bf00}

.lime-background{background-color:#00fa00}

.maroon{color:#600000}

.maroon-background{background-color:#7d0000}

.navy{color:#000060}

.navy-background{background-color:#00007d}

.olive{color:#606000}

.olive-background{background-color:#7d7d00}

.purple{color:#600060}

.purple-background{background-color:#7d007d}

.red{color:#bf0000}

.red-background{background-color:#fa0000}

.silver{color:#909090}

.silver-background{background-color:#bcbcbc}

.teal{color:#006060}

.teal-background{background-color:#007d7d}

.white{color:#bfbfbf}

.white-background{background-color:#fafafa}

.yellow{color:#bfbf00}

.yellow-background{background-color:#fafa00}3.9.3. Source

task publishToConfluence(

description: 'publishes the HTML rendered output to confluence',

group: 'docToolchain'

) {

doLast {

logger.info("docToolchain> docDir: "+docDir)

binding.setProperty('config',config)

binding.setProperty('docDir',docDir)

evaluate(new File(projectDir, 'scripts/asciidoc2confluence.groovy'))

}

}3.10. convertToDocx

-

Needs pandoc installed

-

Please make sure that 'docbook' and 'docx' are added to the inputFiles formats in Config.groovy

-

Optional: you can specify a reference doc file with custom stylesheets (see task createReferenceDoc)

1 minute to read

| Blog-Post: Render AsciiDoc to docx (MS Word) |

3.10.1. Source

task convertToDocx (

group: 'docToolchain',

description: 'converts file to .docx via pandoc. Needs pandoc installed.',

type: Exec

) {

// All files with option `docx` in config.groovy is converted to docbook and then to docx.

def sourceFilesDocx = sourceFiles.findAll { 'docx' in it.formats }

sourceFilesDocx.each {

def sourceFile = it.file.replace('.adoc', '.xml')

def targetFile = sourceFile.replace('.xml', '.docx')

workingDir "$targetDir/docbook"

executable = "pandoc"

if(referenceDocFile?.trim()) {

args = ["-r","docbook",

"-t","docx",

"-o","../docx/$targetFile",

"--reference-doc=${docDir}/${referenceDocFile}",

sourceFile]

} else {

args = ["-r","docbook",

"-t","docx",

"-o","../docx/$targetFile",

sourceFile]

}

}

doFirst {

new File("$targetDir/docx/").mkdirs()

}

}3.11. createReferenceDoc

-

Needs pandoc installed

1 minute to read

Creates a reference docx file used by pandoc during conversion from docbook to docx. You can edit this file to use your prefered styles in task convertToDocx.

Please make sure that 'referenceDocFile' property is set to your custom reference file in Config.groovy

inputPath = '.'

// use a style reference file in the input path for conversion from docbook to docx

referenceDocFile = "${inputPath}/my-ref-file.docx"|

The contents of the reference docx are ignored, but its stylesheets and document properties (including margins, page size, header, and footer) are used in the new docx. See Pandoc User’s Guide: Options affecting specific writers (--reference-doc) If you have problems with changing the default table style: see https://github.com/jgm/pandoc/issues/3275. |

3.11.1. Source

task createReferenceDoc (

group: 'docToolchain helper',

description: 'creates a docx file to be used as a format style reference in task convertToDocx. Needs pandoc installed.',

type: Exec

) {

workingDir "$docDir"

executable = "pandoc"

args = ["-o", "${docDir}/${referenceDocFile}",

"--print-default-data-file",

"reference.docx"]

doFirst {

if(!(referenceDocFile?.trim())) {

throw new GradleException("Option `referenceDocFile` is not defined in config.groovy or has an empty value.")

}

}

}3.12. convertToEpub

1 minute to read

Dependency: generateDocbook

This task uses pandoc to convert the DocBook output from AsciiDoctor to ePub. This way, you can read your documentation in a convenient way on an eBook-reader.

The resulting file can be found in build/docs/epub

| Blog-Post: Turn your Document into an Audio-Book |

3.12.1. Source

task convertToEpub (

group: 'docToolchain',

description: 'converts file to .epub via pandoc. Needs pandoc installed.',

type: Exec

) {

// All files with option `epub` in config.groovy is converted to docbook and then to epub.

def sourceFilesEpub = sourceFiles.findAll { 'epub' in it.formats }

sourceFilesEpub.each {

def sourceFile = it.file.replace('.adoc', '.xml')

def targetFile = sourceFile.replace('.xml', '.epub')

workingDir "$targetDir/docbook"

executable = "pandoc"

args = ['-r','docbook',

'-t','epub',

'-o',"../epub/$targetFile",

sourceFile]

}

doFirst {

new File("$targetDir/epub/").mkdirs()

}

}3.13. exportEA

| Currently this feature is WINDOWS-only. See related issue |

4 minutes to read

3.13.1. Configuration

By default no special configuration is necessary. But, to be more specific on the project and its packages to be used for export, six optional parameter configurations are available. The parameters can be used independently from each other. A sample how to edit your projects Config.groovy is given in the 'Config.groovy' of the docToolchain project itself.

- connection

-

Set the connection to a certain project or comment it out to use all project files inside the src folder or its child folder.

- packageFilter

-

Add one or multiple packageGUIDs to be used for export. All packages are analysed, if no packageFilter is set.

- exportPath

-

Relative path to base 'docDir' to which the diagrams and notes are to be exported. Default: "src/docs". Example: docDir = 'D:\work\mydoc\' ; exportPath = 'src/pdocs' ; Images will be exported to 'D:\work\mydoc\src\pdocs\images\ea', Notes will be exported to 'D:\work\mydoc\src\pdocs\ea',

- searchPath

-

Relative path to base 'docDir', in which Enterprise Architect project files are searched Default: "src/docs". Example: docDir = 'D:\work\mydoc\' ; exportPath = 'src/projects' ; Lookup for eap and eapx files starts in 'D:\work\mydoc\src\projects' and goes down the folder structure. Note: In case parameter 'connection' is already defined, the searchPath value is used, too. exportEA starts opening the database parameter 'connection' first and looks afterwards for further project files either in the searchPath (if set) or in the docDir folder of the project.

- glossaryAsciiDocFormat

-

Depending on this configuration option, the EA project glossary is exported. If it is not set or an empty string, no glossary is exported. The glossaryAsciiDocFormat string is used to format each glossary entry in a certain asciidoc format. Following placeholder for the format string are defined: ID, TERM, MEANING, TYPE. One or many of these placeholder can be used by the output format.

Example: A valid output format to include the glossary as a flat list. The file can be included where needed in the documentation.

glossaryAsciiDocFormat = "TERM:: MEANING"

Other format strings can be used to include it as a table row. The glossary is sorted by terms in alphabetical order.

- glossaryTypes

-

This parameter is used in case a glossaryAsciiDocFormat is defined, otherwise it is not evaluated. It is used to filter for certain types. If the glossaryTypes list is empty, all entries will be used. Example: glossaryTypes = ["Business", "Technical"]

- diagramAttributes

-

Beside the diagram image, an EA diagram offers several useful attributes which could be required in the resulting document. If set, the string is used to create and store the diagram attributes to be included in the document. These placeholders are defined and filled with the diagram attributes, if used in the diagramAttributes string:

%DIAGRAM_AUTHOR%,

%DIAGRAM_CREATED%,

%DIAGRAM_GUID%,

%DIAGRAM_MODIFIED%,

%DIAGRAM_NAME%,

%DIAGRAM_NOTES%,

%NEWLINE%

Example: diagramAttributes = "Last modification: %DIAGRAM_MODIFIED%"

You can add the string %NEWLINE% where a line break shall be added.

The resulting text is stored beside the diagram image using same path and file named, but different file extension (.ad). This can included in the document if required. If diagramAttributes is not set or string is empty, no file is written. - additionalOptions

-

This parameter is used to define specific behavior of the export. Currently these options are supported:

KeepFirstDiagram- in case a diagrams are not names uniquely, the last diagram will be saved. If you want to prevent that diagrams are overwritten, add this parameter to additionalOptions.

3.13.2. Glossary export

By setting the glossaryAsciiDocFormat the glossary terms stored in the EA project is exported into a folder named 'glossary' below the configured exportPath. In case multiple EA projects were found for export, one glossary per project is exported. Each named with the projects GUID plus extension '.ad'. Each single file will be filtered (see glossaryTypes) and sorted in alphabetical order. In addition, a global glossary is created by using all single glossary files. This global file is named 'glossary.ad' and is also placed in the glossary folder. The global glossary is also filtered and sorted. In case there is one EA project only, the global glossary is written only.

3.13.3. Source

task exportEA(

dependsOn: [streamingExecute],

description: 'exports all diagrams and some texts from EA files',

group: 'docToolchain'

) {

doFirst {

}

doLast {

logger.info("docToolchain > exportEA: "+docDir)

logger.info("docToolchain > exportEA: "+mainConfigFile)

def configFile = new File(docDir, mainConfigFile)

def config = new ConfigSlurper().parse(configFile.text)

def scriptParameterString = ""

def exportPath = ""

def searchPath = ""

def glossaryPath = ""

def readme = """This folder contains exported diagrams or notes from Enterprise Architect.

Please note that these are generated files but reside in the `src`-folder in order to be versioned.

This is to make sure that they can be used from environments other than windows.

# Warning!

**The contents of this folder will be overwritten with each re-export!**

use `gradle exportEA` to re-export files

"""

if(!config.exportEA.connection.isEmpty()){

logger.info("docToolchain > exportEA: found "+config.exportEA.connection)

scriptParameterString = scriptParameterString + "-c \"${config.exportEA.connection}\""

}

if (!config.exportEA.packageFilter.isEmpty()){

def packageFilterToCreate = config.exportEA.packageFilter as List

logger.info("docToolchain > exportEA: package filter list size: "+packageFilterToCreate.size())

packageFilterToCreate.each { packageFilter ->

scriptParameterString = scriptParameterString + " -p \"${packageFilter}\""

}

}

if (!config.exportEA.exportPath.isEmpty()){

exportPath = new File(docDir, config.exportEA.exportPath).getAbsolutePath()

}

else {

exportPath = new File(docDir, 'src/docs').getAbsolutePath()

}

if (!config.exportEA.searchPath.isEmpty()){

searchPath = new File(docDir, config.exportEA.searchPath).getAbsolutePath()

}

else if (!config.exportEA.absoluteSearchPath.isEmpty()) {

searchPath = new File(config.exportEA.absoluteSearchPath).getAbsolutePath()

}

else {

searchPath = new File(docDir, 'src').getAbsolutePath()

}

scriptParameterString = scriptParameterString + " -d \"$exportPath\""

scriptParameterString = scriptParameterString + " -s \"$searchPath\""

logger.info("docToolchain > exportEA: exportPath: "+exportPath)

//remove old glossary files/folder if exist

new File(exportPath , 'glossary').deleteDir()

//set the glossary file path in case an output format is configured, other no glossary is written

if (!config.exportEA.glossaryAsciiDocFormat.isEmpty()) {

//create folder to store glossaries

new File(exportPath , 'glossary/.').mkdirs()

glossaryPath = new File(exportPath , 'glossary').getAbsolutePath()

scriptParameterString = scriptParameterString + " -g \"$glossaryPath\""

}

//configure additional diagram attributes to be exported

if (!config.exportEA.diagramAttributes.isEmpty()) {

scriptParameterString = scriptParameterString + " -da \"$config.exportEA.diagramAttributes\""

}

//configure additional diagram attributes to be exported

if (!config.exportEA.additionalOptions.isEmpty()) {

scriptParameterString = scriptParameterString + " -ao \"$config.exportEA.additionalOptions\""

}

//make sure path for notes exists

//and remove old notes

new File(exportPath , 'ea').deleteDir()

//also remove old diagrams

new File(exportPath , 'images/ea').deleteDir()

//create a readme to clarify things

new File(exportPath , 'images/ea/.').mkdirs()

new File(exportPath , 'images/ea/readme.ad').write(readme)

new File(exportPath , 'ea/.').mkdirs()

new File(exportPath , 'ea/readme.ad').write(readme)

//execute through cscript in order to make sure that we get WScript.echo right

logger.info("docToolchain > exportEA: parameters: " + scriptParameterString)

"%SystemRoot%\\System32\\cscript.exe //nologo ${projectDir}/scripts/exportEAP.vbs ${scriptParameterString}".executeCmd()

//the VB Script is only capable of writing iso-8859-1-Files.

//we now have to convert them to UTF-8

new File(exportPath, 'ea/.').eachFileRecurse { file ->

if (file.isFile()) {

println "exported notes " + file.canonicalPath

file.write(file.getText('iso-8859-1'), 'utf-8')

}

}

//sort, filter and reformat a glossary if an output format is configured

if (!config.exportEA.glossaryAsciiDocFormat.isEmpty()) {

def glossaryTypes

if (!config.exportEA.glossaryTypes.isEmpty()){

glossaryTypes = config.exportEA.glossaryTypes as List

}

new GlossaryHandler().execute(glossaryPath, config.exportEA.glossaryAsciiDocFormat, glossaryTypes);

}

}

} ' based on the "Project Interface Example" which comes with EA

' http://stackoverflow.com/questions/1441479/automated-method-to-export-enterprise-architect-diagrams

Dim EAapp 'As EA.App

Dim Repository 'As EA.Repository

Dim FS 'As Scripting.FileSystemObject

Dim projectInterface 'As EA.Project

Const ForAppending = 8

Const ForWriting = 2

' Helper

' http://windowsitpro.com/windows/jsi-tip-10441-how-can-vbscript-create-multiple-folders-path-mkdir-command

Function MakeDir (strPath)

Dim strParentPath, objFSO

Set objFSO = CreateObject("Scripting.FileSystemObject")

On Error Resume Next

strParentPath = objFSO.GetParentFolderName(strPath)

If Not objFSO.FolderExists(strParentPath) Then MakeDir strParentPath

If Not objFSO.FolderExists(strPath) Then objFSO.CreateFolder strPath

On Error Goto 0

MakeDir = objFSO.FolderExists(strPath)

End Function

' Replaces certain characters with '_' to avoid unwanted file or folder names causing errors or structure failures.

' Regular expression can easily be extended with further characters to be replaced.

Function NormalizeName(theName)

dim re : Set re = new regexp

re.Pattern = "[\\/\[\]\s]"

re.Global = True

NormalizeName = re.Replace(theName, "_")

End Function

Sub WriteNote(currentModel, currentElement, notes, prefix)

If (Left(notes, 6) = "{adoc:") Then

strFileName = Mid(notes,7,InStr(notes,"}")-7)

strNotes = Right(notes,Len(notes)-InStr(notes,"}"))

set objFSO = CreateObject("Scripting.FileSystemObject")

If (currentModel.Name="Model") Then

' When we work with the default model, we don't need a sub directory

path = objFSO.BuildPath(exportDestination,"ea/")

Else

path = objFSO.BuildPath(exportDestination,"ea/"&NormalizeName(currentModel.Name)&"/")

End If

MakeDir(path)

post = ""

If (prefix<>"") Then

post = "_"

End If

MakeDir(path&prefix&post)

set objFile = objFSO.OpenTextFile(path&prefix&post&strFileName&".ad",ForAppending, True)

name = currentElement.Name

name = Replace(name,vbCr,"")

name = Replace(name,vbLf,"")

if (Left(strNotes, 3) = vbCRLF&"|") Then

' content should be rendered as table - so don't interfere with it

objFile.WriteLine(vbCRLF)

else

'let's add the name of the object

objFile.WriteLine(vbCRLF&vbCRLF&"."&name)

End If

objFile.WriteLine(vbCRLF&strNotes)

objFile.Close

if (prefix<>"") Then

' write the same to a second file

set objFile = objFSO.OpenTextFile(path&prefix&".ad",ForAppending, True)

objFile.WriteLine(vbCRLF&vbCRLF&"."&name&vbCRLF&strNotes)

objFile.Close

End If

End If

End Sub

Sub SyncJira(currentModel, currentDiagram)

notes = currentDiagram.notes

set currentPackage = Repository.GetPackageByID(currentDiagram.PackageID)

updated = 0

created = 0

If (Left(notes, 6) = "{jira:") Then

WScript.echo " >>>> Diagram jira tag found"

strSearch = Mid(notes,7,InStr(notes,"}")-7)

Set objShell = CreateObject("WScript.Shell")

'objShell.CurrentDirectory = fso.GetFolder("./scripts")

Set objExecObject = objShell.Exec ("cmd /K groovy ./scripts/exportEAPJiraPrintHelper.groovy """ & strSearch &""" & exit")

strReturn = ""

x = 0

y = 0

Do While Not objExecObject.StdOut.AtEndOfStream

output = objExecObject.StdOut.ReadLine()

' WScript.echo output

jiraElement = Split(output,"|")

name = jiraElement(0)&":"&vbCR&vbLF&jiraElement(4)

On Error Resume Next

Set requirement = currentPackage.Elements.GetByName(name)

On Error Goto 0

if (IsObject(requirement)) then

' element already exists

requirement.notes = ""

requirement.notes = requirement.notes&"<a href='"&jiraElement(5)&"'>"&jiraElement(0)&"</a>"&vbCR&vbLF

requirement.notes = requirement.notes&"Priority: "&jiraElement(1)&vbCR&vbLF

requirement.notes = requirement.notes&"Created: "&jiraElement(2)&vbCR&vbLF

requirement.notes = requirement.notes&"Assignee: "&jiraElement(3)&vbCR&vbLF

requirement.Update()

updated = updated + 1

else

Set requirement = currentPackage.Elements.AddNew(name,"Requirement")

requirement.notes = ""

requirement.notes = requirement.notes&"<a href='"&jiraElement(5)&"'>"&jiraElement(0)&"</a>"&vbCR&vbLF

requirement.notes = requirement.notes&"Priority: "&jiraElement(1)&vbCR&vbLF

requirement.notes = requirement.notes&"Created: "&jiraElement(2)&vbCR&vbLF

requirement.notes = requirement.notes&"Assignee: "&jiraElement(3)&vbCR&vbLF

requirement.Update()

currentPackage.Elements.Refresh()

Set dia_obj = currentDiagram.DiagramObjects.AddNew("l="&(10+x*200)&";t="&(10+y*50)&";b="&(10+y*50+44)&";r="&(10+x*200+180),"")

x = x + 1

if (x>3) then

x = 0

y = y + 1

end if

dia_obj.ElementID = requirement.ElementID

dia_obj.Update()

created = created + 1

end if

Loop

Set objShell = Nothing

WScript.echo "created "&created&" requirements"

WScript.echo "updated "&updated&" requirements"

End If

End Sub

' This sub routine checks if the format string defined in diagramAttributes

' does contain any characters. It replaces the known placeholders:

' %DIAGRAM_AUTHOR%, %DIAGRAM_CREATED%, %DIAGRAM_GUID%, %DIAGRAM_MODIFIED%,

' %DIAGRAM_NAME%, %DIAGRAM_NOTES%

' with the attribute values read from the EA diagram object.

' None, one or multiple number of placeholders can be used to create a diagram attribute

' to be added to the document. The attribute string is stored as a file with the same

' path and name as the diagram image, but with suffix .ad. So, it can

' easily be included in an asciidoc file.

Sub SaveDiagramAttribute(currentDiagram, path, diagramName)

If Len(diagramAttributes) > 0 Then

filledDiagAttr = diagramAttributes

set objFSO = CreateObject("Scripting.FileSystemObject")

filename = objFSO.BuildPath(path, diagramName & ".ad")

set objFile = objFSO.OpenTextFile(filename, ForWriting, True)

filledDiagAttr = Replace(filledDiagAttr, "%DIAGRAM_AUTHOR%", currentDiagram.Author)

filledDiagAttr = Replace(filledDiagAttr, "%DIAGRAM_CREATED%", currentDiagram.CreatedDate)

filledDiagAttr = Replace(filledDiagAttr, "%DIAGRAM_GUID%", currentDiagram.DiagramGUID)

filledDiagAttr = Replace(filledDiagAttr, "%DIAGRAM_MODIFIED%", currentDiagram.ModifiedDate)

filledDiagAttr = Replace(filledDiagAttr, "%DIAGRAM_NAME%", currentDiagram.Name)

filledDiagAttr = Replace(filledDiagAttr, "%DIAGRAM_NOTES%", currentDiagram.Notes)

filledDiagAttr = Replace(filledDiagAttr, "%NEWLINE%", vbCrLf)

objFile.WriteLine(filledDiagAttr)

objFile.Close

End If

End Sub

Sub SaveDiagram(currentModel, currentDiagram)

Dim exportDiagram ' As Boolean

' Open the diagram

Repository.OpenDiagram(currentDiagram.DiagramID)

' Save and close the diagram

set objFSO = CreateObject("Scripting.FileSystemObject")

If (currentModel.Name="Model") Then

' When we work with the default model, we don't need a sub directory

path = objFSO.BuildPath(exportDestination,"/images/ea/")

Else

path = objFSO.BuildPath(exportDestination,"/images/ea/" & NormalizeName(currentModel.Name) & "/")

End If

path = objFSO.GetAbsolutePathName(path)

MakeDir(path)

diagramName = currentDiagram.Name

diagramName = Replace(diagramName,vbCr,"")

diagramName = Replace(diagramName,vbLf,"")

diagramName = NormalizeName(diagramName)

filename = objFSO.BuildPath(path, diagramName & ".png")

exportDiagram = True

If objFSO.FileExists(filename) Then

WScript.echo " --- " & filename & " already exists."

If Len(additionalOptions) > 0 Then

If InStr(additionalOptions, "KeepFirstDiagram") > 0 Then

WScript.echo " --- Skipping export -- parameter 'KeepFirstDiagram' set."

Else

WScript.echo " --- Overwriting -- parameter 'KeepFirstDiagram' not set."

exportDiagram = False

End If

Else

WScript.echo " --- Overwriting -- parameter 'KeepFirstDiagram' not set."

End If

End If

If exportDiagram Then

projectInterface.SaveDiagramImageToFile(filename)

WScript.echo " extracted image to " & filename

If Not IsEmpty(diagramAttributes) Then

SaveDiagramAttribute currentDiagram, path, diagramName

End If

End If

Repository.CloseDiagram(currentDiagram.DiagramID)

' Write the note of the diagram

WriteNote currentModel, currentDiagram, currentDiagram.Notes, diagramName&"_notes"

For Each diagramElement In currentDiagram.DiagramObjects

Set currentElement = Repository.GetElementByID(diagramElement.ElementID)

WriteNote currentModel, currentElement, currentElement.Notes, diagramName&"_notes"

Next

For Each diagramLink In currentDiagram.DiagramLinks

set currentConnector = Repository.GetConnectorByID(diagramLink.ConnectorID)

WriteNote currentModel, currentConnector, currentConnector.Notes, diagramName&"_links"

Next

End Sub

'

' Recursively saves all diagrams under the provided package and its children

'

Sub DumpDiagrams(thePackage,currentModel)

Set currentPackage = thePackage

' export element notes

For Each currentElement In currentPackage.Elements

WriteNote currentModel, currentElement, currentElement.Notes, ""

' export connector notes

For Each currentConnector In currentElement.Connectors

' WScript.echo currentConnector.ConnectorGUID

if (currentConnector.ClientID=currentElement.ElementID) Then

WriteNote currentModel, currentConnector, currentConnector.Notes, ""

End If

Next

if (Not currentElement.CompositeDiagram Is Nothing) Then

SyncJira currentModel, currentElement.CompositeDiagram

SaveDiagram currentModel, currentElement.CompositeDiagram

End If

if (Not currentElement.Elements Is Nothing) Then

DumpDiagrams currentElement,currentModel

End If

Next

' Iterate through all diagrams in the current package

For Each currentDiagram In currentPackage.Diagrams

SyncJira currentModel, currentDiagram

SaveDiagram currentModel, currentDiagram

Next

' Process child packages

Dim childPackage 'as EA.Package

' otPackage = 5

if (currentPackage.ObjectType = 5) Then

For Each childPackage In currentPackage.Packages

call DumpDiagrams(childPackage, currentModel)

Next

End If

End Sub

Function SearchEAProjects(path)

For Each folder In path.SubFolders

SearchEAProjects folder

Next

For Each file In path.Files

If fso.GetExtensionName (file.Path) = "eap" OR fso.GetExtensionName (file.Path) = "eapx" Then

WScript.echo "found "&file.path

If (Left(file.name, 1) = "_") Then

WScript.echo "skipping, because it start with `_` (replication)"

Else

OpenProject(file.Path)

End If

End If

Next

End Function

'Gets the package object as referenced by its GUID from the Enterprise Architect project.

'Looks for the model node, the package is a child of as it is required for the diagram export.

'Calls the Sub routine DumpDiagrams for the model and package found.

'An error is printed to console only if the packageGUID is not found in the project.

Function DumpPackageDiagrams(EAapp, packageGUID)

WScript.echo "DumpPackageDiagrams"

WScript.echo packageGUID

Dim package

Set package = EAapp.Repository.GetPackageByGuid(packageGUID)

If (package Is Nothing) Then

WScript.echo "invalid package - as package is not part of the project"

Else

Dim currentModel

Set currentModel = package

while currentModel.IsModel = false

Set currentModel = EAapp.Repository.GetPackageByID(currentModel.parentID)

wend

' Iterate through all child packages and save out their diagrams

' save all diagrams of package itself

call DumpDiagrams(package, currentModel)

End If

End Function

Function FormatStringToJSONString(inputString)

outputString = Replace(inputString, "\", "\\")

outputString = Replace(outputString, """", "\""")

outputString = Replace(outputString, vbCrLf, "\n")

outputString = Replace(outputString, vbLf, "\n")

outputString = Replace(outputString, vbCr, "\n")

FormatStringToJSONString = outputString

End Function

'If a valid file path is set, the glossary terms are read from EA repository,

'formatted in a JSON compatible format and written into file.

'The file is read and reformatted by the exportEA gradle task afterwards.

Function ExportGlossaryTermsAsJSONFile(EArepo)

If (Len(glossaryFilePath) > 0) Then

set objFSO = CreateObject("Scripting.FileSystemObject")

GUID = Replace(EArepo.ProjectGUID,"{","")

GUID = Replace(GUID,"}","")

currentGlossaryFile = objFSO.BuildPath(glossaryFilePath,"/"&GUID&".ad")

set objFile = objFSO.OpenTextFile(currentGlossaryFile,ForAppending, True)

Set glossary = EArepo.Terms()

objFile.WriteLine("[")

dim counter

counter = 0

For Each term In glossary

if (counter > 0) Then

objFile.Write(",")

end if

objFile.Write("{ ""term"" : """&FormatStringToJSONString(term.term)&""", ""meaning"" : """&FormatStringToJSONString(term.Meaning)&""",")

objFile.WriteLine(" ""termID"" : """&FormatStringToJSONString(term.termID)&""", ""type"" : """&FormatStringToJSONString(term.type)&""" }")

counter = counter + 1

Next

objFile.WriteLine("]")

objFile.Close

End If

End Function

Sub OpenProject(file)

' open Enterprise Architect

Set EAapp = CreateObject("EA.App")

WScript.echo "opening Enterprise Architect. This might take a moment..."

' load project

EAapp.Repository.OpenFile(file)

' make Enterprise Architect to not appear on screen

EAapp.Visible = False

' get repository object

Set Repository = EAapp.Repository

' Show the script output window

' Repository.EnsureOutputVisible("Script")

call ExportGlossaryTermsAsJSONFile(Repository)

Set projectInterface = Repository.GetProjectInterface()

Dim childPackage 'As EA.Package

' Iterate through all model nodes

Dim currentModel 'As EA.Package

If (InStrRev(file,"{") > 0) Then

' the filename references a GUID

' like {04C44F80-8DA1-4a6f-ECB8-982349872349}

WScript.echo file

GUID = Mid(file, InStrRev(file,"{")+0,38)

WScript.echo GUID

' Iterate through all child packages and save out their diagrams

call DumpPackageDiagrams(EAapp, GUID)

Else

If packageFilter.Count = 0 Then

WScript.echo "done"

' Iterate through all model nodes

For Each currentModel In Repository.Models

' Iterate through all child packages and save out their diagrams

For Each childPackage In currentModel.Packages

call DumpDiagrams(childPackage,currentModel)

Next

Next

Else

' Iterate through all packages found in the package filter given by script parameter.

For Each packageGUID In packageFilter

call DumpPackageDiagrams(EAapp, packageGUID)

Next

End If

End If

EAapp.Repository.CloseFile()

' Since EA 15.2 the Enterprise Architect background process hangs without calling Exit explicitly

EAapp.Repository.Exit()

End Sub

Private connectionString

Private packageFilter

Private exportDestination

Private searchPath

Private glossaryFilePath

Private diagramAttributes

Private additionalOptions

exportDestination = "./src/docs"

searchPath = "./src"

Set packageFilter = CreateObject("System.Collections.ArrayList")

Set objArguments = WScript.Arguments

Dim argCount

argCount = 0

While objArguments.Count > argCount+1

Select Case objArguments(argCount)

Case "-c"

connectionString = objArguments(argCount+1)

Case "-p"

packageFilter.Add objArguments(argCount+1)

Case "-d"

exportDestination = objArguments(argCount+1)

Case "-s"

searchPath = objArguments(argCount+1)

Case "-g"

glossaryFilePath = objArguments(argCount+1)

Case "-da"

diagramAttributes = objArguments(argCount+1)

Case "-ao"

additionalOptions = objArguments(argCount+1)

End Select

argCount = argCount + 2

WEnd

set fso = CreateObject("Scripting.fileSystemObject")

WScript.echo "Image extractor"

' Check both types in parallel - 1st check Enterprise Architect database connection, 2nd look for local project files

If Not IsEmpty(connectionString) Then

WScript.echo "opening database connection now"

OpenProject(connectionString)

End If

WScript.echo "looking for .eap(x) files in " & fso.GetAbsolutePathName(searchPath)

' Dim f As Scripting.Files

SearchEAProjects fso.GetFolder(searchPath)

WScript.echo "finished exporting images"3.14. exportVisio

1 minute to read

This tasks searches for Visio files in the /src/docs folder.

It then exports all diagrams and element notes to /src/docs/images/visio and /src/docs/visio.

-

Images are stored as

/images/visio/[filename]-[pagename].png -

Notes are stored as

/visio/[filename]-[pagename].adoc

You can specify a file name to which the notes of a diagram are exported by starting any comment with {adoc:[filename].adoc}.

It will then be written to /visio/[filename].adoc.

Currently, only Visio files stored directly in /src/docs are supported.

For all others, the exported files will be in the wrong location.

|

| Please close any running Visio instance before starting this task. |

| Todos: issue #112 |

3.14.1. Source

task exportVisio(

dependsOn: [streamingExecute],

description: 'exports all diagrams and notes from visio files',

group: 'docToolchain'

) {

doLast {

//make sure path for notes exists

//and remove old notes

new File(docDir, 'src/docs/visio').deleteDir()

//also remove old diagrams

new File(docDir, 'src/docs/images/visio').deleteDir()

//create a readme to clarify things

def readme = """This folder contains exported diagrams and notes from visio files.

Please note that these are generated files but reside in the `src`-folder in order to be versioned.

This is to make sure that they can be used from environments other than windows.

# Warning!

**The contents of this folder will be overwritten with each re-export!**

use `gradle exportVisio` to re-export files

"""

new File(docDir, 'src/docs/images/visio/.').mkdirs()

new File(docDir, 'src/docs/images/visio/readme.ad').write(readme)

new File(docDir, 'src/docs/visio/.').mkdirs()

new File(docDir, 'src/docs/visio/readme.ad').write(readme)

def sourcePath = new File(docDir, 'src/docs/.').canonicalPath

def scriptPath = new File(projectDir, 'scripts/VisioPageToPngConverter.ps1').canonicalPath

"powershell ${scriptPath} -SourcePath ${sourcePath}".executeCmd()

}

}# Convert all pages in all visio files in the given directory to png files.

# A Visio windows might flash shortly.

# The converted png files are stored in the same directory

# The name of the png file is concatenated from the Visio file name and the page name.

# In addtion all the comments are stored in adoc files.

# If the Viso file is named "MyVisio.vsdx" and the page is called "FirstPage"

# the name of the png file will be "MyVisio-FirstPage.png" and the comment will

# be stored in "MyVisio-FirstPage.adoc".

# But for the name of the adoc files there is an alternative. It can be given in the first

# line of the comment. If it is given in the comment it has to be given in curly brackes

# with the prefix "adoc:", e.g. {adoc:MyCommentFile.adoc}

# Prerequisites: Viso and PowerShell has to be installed on the computer.

# Parameter: SourcePath where visio files can be found

# Example powershell VisoPageToPngConverter.ps1 -SourcePath c:\convertertest\

Param

(

[Parameter(Mandatory=$true,ValueFromPipeline=$true,Position=0)]

[Alias('p')][String]$SourcePath

)

Write-Output "starting to export visio"

If (!(Test-Path -Path $SourcePath))

{

Write-Warning "The path ""$SourcePath"" does not exist or is not accessible, please input the correct path."

Exit

}

# Extend the source path to get only Visio files of the given directory and not in subdircetories

If ($SourcePath.EndsWith("\"))

{

$SourcePath = "$SourcePath"

}

Else

{

$SourcePath = "$SourcePath\"

}

$VisioFiles = Get-ChildItem -Path "$SourcePath*" -Recurse -Include *.vsdx,*.vssx,*.vstx,*.vxdm,*.vssm,*.vstm,*.vsd,*.vdw,*.vss,*.vst

If(!($VisioFiles))

{

Write-Warning "There are no Visio files in the path ""$SourcePath""."

Exit

}

$VisioApp = New-Object -ComObject Visio.Application

$VisioApp.Visible = $false

# Extract the png from all the files in the folder

Foreach($File in $VisioFiles)

{

$FilePath = $File.FullName

Write-Output "found ""$FilePath"" ."

$FileDirectory = $File.DirectoryName # Get the folder containing the Visio file. Will be used to store the png and adoc files

$FileBaseName = $File.BaseName -replace '[ :/\\*?|<>]','-' # Get the filename to be used as part of the name of the png and adoc files

Try

{

$Document = $VisioApp.Documents.Open($FilePath)

$Pages = $VisioApp.ActiveDocument.Pages

Foreach($Page in $Pages)

{

# Create valid filenames for the png and adoc files

$PngFileName = $Page.Name -replace '[ :/\\*?|<>]','-'

$PngFileName = "$FileBaseName-$PngFileName.png"

$AdocFileName = $PngFileName.Replace(".png", ".adoc")

#TODO: this needs better logic

Write-Output("$SourcePath\images\visio\$PngFileName")

$Page.Export("$SourcePath\images\visio\$PngFileName")

$AllPageComments = ""

ForEach($PageComment in $Page.Comments)

{

# Extract adoc filename from comment text if the syntax is valid

# Remove the filename from the text and save the comment in a file with a valid name

$EofStringIndex = $PageComment.Text.IndexOf(".adoc}")

if ($PageComment.Text.StartsWith("{adoc") -And ($EofStringIndex -gt 6))

{

$AdocFileName = $PageComment.Text.Substring(6, $EofStringIndex -1)

$AllPageComments += $PageComment.Text.Substring($EofStringIndex + 6)

}

else

{

$AllPageComments += $PageComment.Text+"`n"

}

}

If ($AllPageComments)

{